Concurrency is NOT Parallelism

(4 Minutes) | Concurrency vs Parallelism — They Are Not The Same

Get our Architecture Patterns Playbook for FREE on newsletter signup:

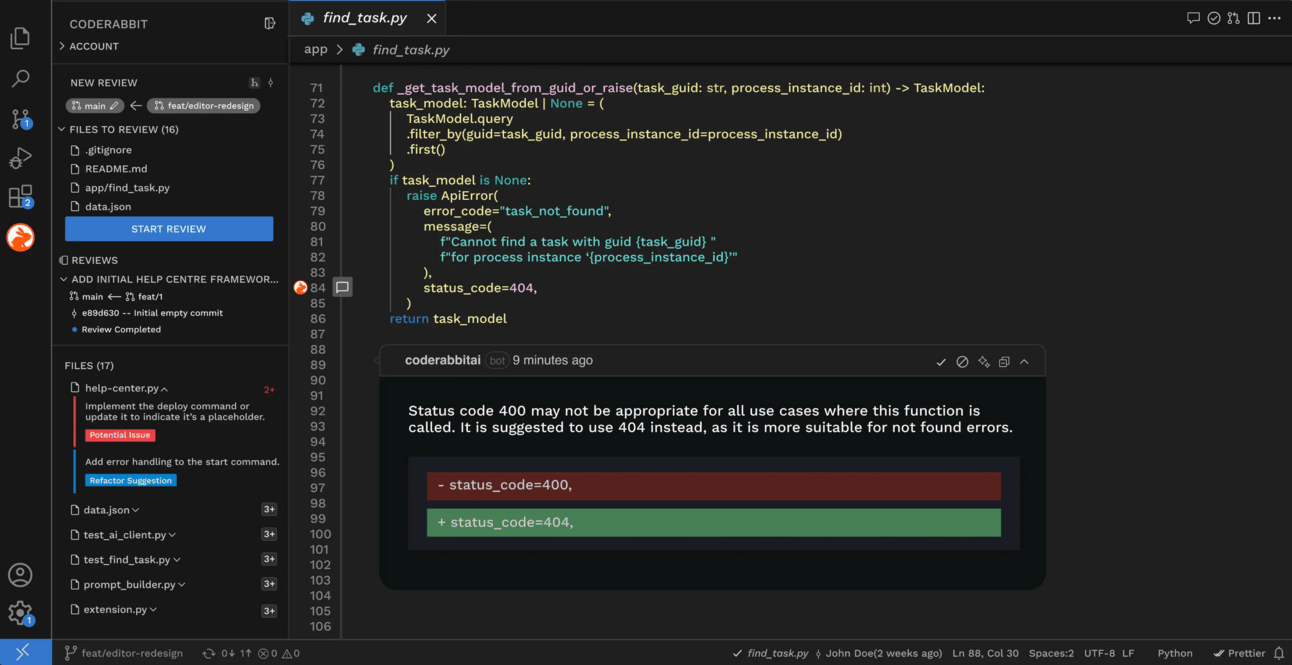

Free AI Code Reviews in your IDE Powered by CodeRabbit

Presented by CodeRabbit

CodeRabbit delivers free AI code reviews in VS Code, Cursor, and Windsurf. Instantly catches bugs and code smells, suggests refactorings, and delivers context aware feedback for every commit, all without configuration. Supports all programming languages; trusted on 12M+ PRs across 1M repos

Concurrency vs Parallelism: Designing Efficient Systems

Parallelism and concurrency are two terms that often create confusion.

Concurrency is about managing multiple tasks at once, interleaving them to optimize resource usage.

Parallelism is about executing multiple tasks at the same time.

“Concurrency is about dealing with lots of things at once. Parallelism is about doing lots of things at once.” - Rob Pike, co-creator of Go

Concurrency: Managing Tasks Efficiently

Concurrency structures a system to handle multiple tasks by interleaving them. Even on a single core, a scheduler rapidly switches between tasks. This is especially useful when tasks spend time waiting (e.g., on I/O operations), because another task can use the core in the meantime.

Can run on a single core, but requires software support for scheduling and task switching

Ideal for I/O-bound workloads

Introduces context-switching overhead

Concurrency is a design choice about how tasks are organized and coordinated.
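To make the interleaving concrete, here is a minimal sketch using Python's `asyncio`, which runs all tasks on a single thread. The `fetch` function and its delays are hypothetical stand-ins for real I/O calls; `asyncio.sleep` simulates the waiting.

```python
import asyncio
import time


async def fetch(name: str, delay: float) -> str:
    """Hypothetical I/O-bound call: the sleep stands in for a network wait."""
    await asyncio.sleep(delay)  # hands control back to the event loop while waiting
    return f"{name} done"


async def run_all() -> tuple[list[str], float]:
    start = time.perf_counter()
    # gather() runs the three coroutines concurrently on one thread
    # and returns their results in argument order.
    results = await asyncio.gather(
        fetch("a", 0.2),
        fetch("b", 0.2),
        fetch("c", 0.2),
    )
    elapsed = time.perf_counter() - start
    return list(results), elapsed


if __name__ == "__main__":
    results, elapsed = asyncio.run(run_all())
    print(results, f"{elapsed:.2f}s")
```

Because the three waits overlap, the total time should be close to the longest single wait rather than the sum of all three, even though nothing runs in parallel.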

Parallelism: Simultaneous Task Execution

Parallelism distributes tasks across multiple CPU cores to run them at the same time, dramatically improving compute-bound performance.

Requires hardware support (multi-core CPUs) and software support (e.g., thread pools, task runtimes)

Ideal for CPU-bound workloads

Less effective for I/O-heavy tasks

Parallelism is an execution strategy focused on how many things are done at once.
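As a rough illustration, the sketch below splits a CPU-bound job (counting primes by trial division) into chunks and runs each chunk in its own process with `ProcessPoolExecutor`. The function names and the chunking scheme are assumptions made for this example. Processes are used rather than threads because, in standard CPython, threads do not run Python bytecode on multiple cores at once.

```python
import math
import os
from concurrent.futures import ProcessPoolExecutor


def count_primes(bounds: tuple[int, int]) -> int:
    """CPU-bound work: count primes in [lo, hi) by trial division."""
    lo, hi = bounds
    count = 0
    for n in range(max(lo, 2), hi):
        if all(n % d for d in range(2, math.isqrt(n) + 1)):
            count += 1
    return count


def split_range(lo: int, hi: int, parts: int) -> list[tuple[int, int]]:
    """Split [lo, hi) into at most `parts` contiguous chunks (assumes hi > lo)."""
    step = (hi - lo + parts - 1) // parts
    return [(s, min(s + step, hi)) for s in range(lo, hi, step)]


def count_primes_parallel(lo: int, hi: int, workers: int) -> int:
    chunks = split_range(lo, hi, workers)
    # Each chunk is handed to a separate worker process,
    # so chunks can occupy separate cores at the same time.
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(count_primes, chunks))


if __name__ == "__main__":
    workers = os.cpu_count() or 1
    print(count_primes_parallel(0, 100_000, workers))
```

Equal-width chunks are a simplification: higher ranges cost more per number, so a real workload might use more, smaller chunks to balance the load.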

How They Relate

Concurrency is often a precursor to parallelism. You can have concurrency without parallelism (e.g., Node.js running JavaScript on a single thread), but parallelism typically builds on a well-designed concurrent structure.

Modern systems combine both for responsiveness and throughput.

Concurrency coordinates tasks waiting on external resources.

Parallelism speeds up internal compute tasks within those workflows.

Synchronization & Pitfalls

When concurrent or parallel tasks share resources, synchronization is essential to ensure correctness.

Mutex: Allows only one thread at a time to access a resource

Semaphore: Allows a limited number of threads to access a resource concurrently
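Both primitives can be sketched with Python's `threading` module. The `SafeCounter` and `LimitedResource` classes and the `run_threads` helper are hypothetical names invented for this example: the first guards a counter with a mutex, the second uses a semaphore to cap how many threads use a resource at once.

```python
import threading
import time


class SafeCounter:
    """Counter guarded by a mutex: one thread at a time may update it."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        with self._lock:  # makes the read-modify-write a single critical section
            self.value += 1


class LimitedResource:
    """Resource guarded by a semaphore: at most `max_users` threads at a time."""

    def __init__(self, max_users: int) -> None:
        self._slots = threading.Semaphore(max_users)
        self._lock = threading.Lock()  # protects the bookkeeping fields below
        self.active = 0
        self.peak = 0

    def use(self, hold: float = 0.01) -> None:
        with self._slots:  # blocks while max_users threads are already inside
            with self._lock:
                self.active += 1
                self.peak = max(self.peak, self.active)
            time.sleep(hold)  # stand-in for real work on the resource
            with self._lock:
                self.active -= 1


def run_threads(target, n_threads: int) -> None:
    """Start n_threads threads running `target` and wait for all of them."""
    threads = [threading.Thread(target=target) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()


if __name__ == "__main__":
    counter = SafeCounter()
    run_threads(lambda: [counter.increment() for _ in range(10_000)], 4)
    resource = LimitedResource(max_users=2)
    run_threads(resource.use, 8)
    print(counter.value, resource.peak)
```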

Poor synchronization can lead to:

Race conditions: Shared state is accessed or modified without proper coordination, leading to nondeterministic behavior

Deadlocks: Two or more tasks wait on each other indefinitely

Starvation: Some tasks are continually denied access to a resource due to unfair scheduling or contention

Mutexes and semaphores prevent race conditions, but they can introduce contention, especially under high load or with coarse-grained locking. Used carelessly, they can themselves cause deadlocks and starvation.
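One common way to avoid the deadlock described above is to always acquire locks in a fixed global order. The sketch below assumes a hypothetical `Account` type with a unique `id`. Ordering by `id` is an illustrative choice; any consistent ordering would do.

```python
import threading
from dataclasses import dataclass, field


@dataclass
class Account:
    id: int
    balance: int
    lock: threading.Lock = field(default_factory=threading.Lock)


def transfer(src: Account, dst: Account, amount: int) -> None:
    """Move `amount` from src to dst, locking both accounts in a fixed order."""
    if src is dst:
        return  # nothing to do, and locking the same lock twice would block forever
    # Always lock the lower id first. Without this rule, transfer(a, b) and
    # transfer(b, a) could each hold one lock while waiting for the other.
    first, second = sorted((src, dst), key=lambda acct: acct.id)
    with first.lock:
        with second.lock:
            src.balance -= amount
            dst.balance += amount


if __name__ == "__main__":
    a, b = Account(1, 100), Account(2, 100)
    t1 = threading.Thread(target=lambda: [transfer(a, b, 1) for _ in range(1000)])
    t2 = threading.Thread(target=lambda: [transfer(b, a, 1) for _ in range(1000)])
    t1.start()
    t2.start()
    t1.join()
    t2.join()
    print(a.balance, b.balance)
```

The two threads transfer in opposite directions, which is the pattern that deadlocks when each thread locks its own source account first.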

Real-World Use Cases

Responsive UI: Async callbacks fetch data without blocking the main thread (concurrency)

Servers & Load Balancers: Event loops and thread pools manage high request volumes (concurrency, parallelism)

Databases: Connection pooling and sharding enable concurrent handling and parallel query execution (concurrency, parallelism)

Measuring & Tuning

When applying concurrency or parallelism, measure:

Latency – for responsiveness

Throughput – work completed per unit of time, the key metric for CPU-bound performance

Context switch overhead – too much concurrency can degrade performance

CPU utilization – are cores being used efficiently?

Choosing the right strategy means balancing these metrics against your workload characteristics.
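A small harness can make the first two metrics visible. The sketch below is only an illustration: `measure`, `timed`, and `fake_io` are hypothetical names, and the latency recorded is the time each task spends running, not the time it spends queued. Context-switch counts and CPU utilization usually come from operating system tools rather than from application code.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def timed(task) -> float:
    """Run `task` once and return how long it took, in seconds."""
    start = time.perf_counter()
    task()
    return time.perf_counter() - start


def measure(task, n_tasks: int, workers: int) -> dict[str, float]:
    """Run `task` n_tasks times on `workers` threads; report latency and throughput."""
    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        latencies = list(pool.map(lambda _: timed(task), range(n_tasks)))
    wall = time.perf_counter() - wall_start
    return {
        "mean_latency_s": statistics.mean(latencies),
        "max_latency_s": max(latencies),
        "throughput_per_s": n_tasks / wall,
    }


def fake_io() -> None:
    time.sleep(0.02)  # hypothetical I/O wait


if __name__ == "__main__":
    for workers in (1, 4, 16):
        print(workers, measure(fake_io, 32, workers))
```

Comparing the runs across worker counts shows where adding concurrency stops paying off for a given workload.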

Final Thoughts

Concurrency structures how tasks run.

Parallelism determines how many run at the same time.

Used together, and measured intentionally, they unlock systems that are both responsive and high-throughput.
