Top 5 Database Caching Strategies You Should Know

(5 minutes) | Speed gains, consistency risks, and real trade-offs




Caching is a core performance optimization technique.

Done well, caching reduces latency, protects your database, and improves throughput. Done poorly, it introduces stale reads, hidden consistency bugs, and memory waste that only shows up in production.

The problem is not whether to cache.

It’s how.

Different caching strategies exist because systems have different access patterns, consistency needs, and failure tolerances.

Let’s dive into five of the most widely used caching strategies.

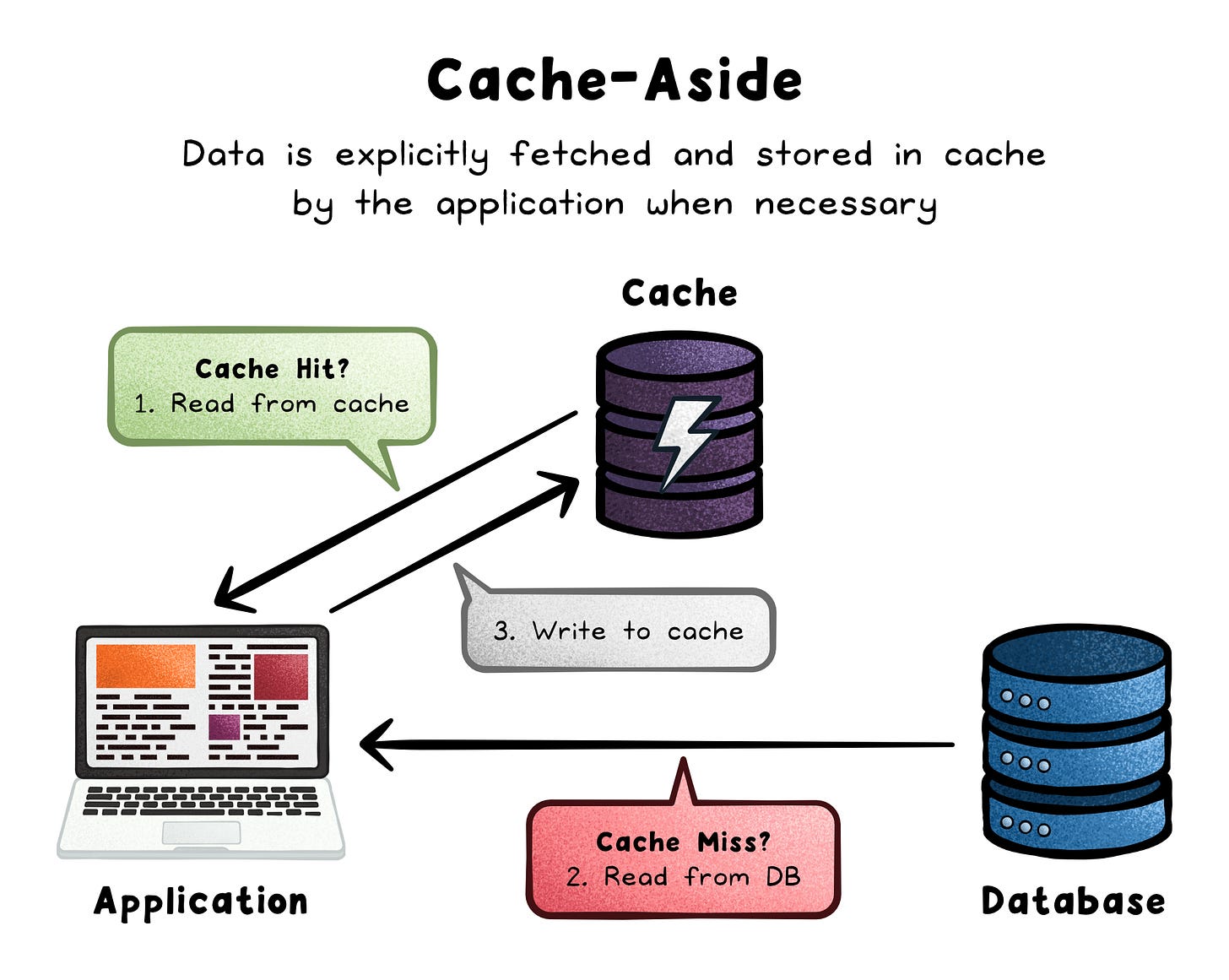

1) Cache-Aside

In a cache-aside strategy, the application explicitly manages reads and writes to the cache.

It queries the cache first. On a cache miss, it fetches the data from the database, writes it into the cache, and returns it.

The cache only stores data after it’s been read by the app.
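To make the flow concrete, here is a minimal sketch. It assumes two plain dicts stand in for the database and the cache (think a SQL table and Redis in a real system). `get_user` and `update_user` are hypothetical names. The write path deletes the cached copy instead of updating it, which is one common way to handle the invalidation that cache-aside leaves to you.

```python
from typing import Optional

# Hypothetical stand-ins: plain dicts play the role of the database and the cache.
database: dict[str, str] = {"user:1": "Ada"}
cache: dict[str, str] = {}


def get_user(key: str) -> Optional[str]:
    """Cache-aside read: the application checks the cache, then the database."""
    if key in cache:              # cache hit
        return cache[key]
    value = database.get(key)     # cache miss: read from the database
    if value is not None:
        cache[key] = value        # populate the cache so later reads hit
    return value


def update_user(key: str, value: str) -> None:
    """Cache-aside write: update the database, then invalidate the cached copy."""
    database[key] = value
    cache.pop(key, None)          # the next read misses and reloads fresh data
```

The first `get_user("user:1")` misses and fills the cache, and the second one hits. After `update_user("user:1", "Grace")`, the next read misses again and returns the new value. Keys nobody asks for never enter the cache, which is the "demand-driven" property.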

Pros:

Full control → The application decides what to cache and when.

Demand-driven → You only cache data that someone asked for.

Flexible → Works well when access patterns are unpredictable.

Cons:

Consistency is your responsibility → You must handle invalidation correctly.

More application logic → Every read path needs cache awareness.

Cache-aside shines in systems where usage patterns are unpredictable or data freshness varies. However, beware that sloppy cache management can lead to stale reads or wasted memory.

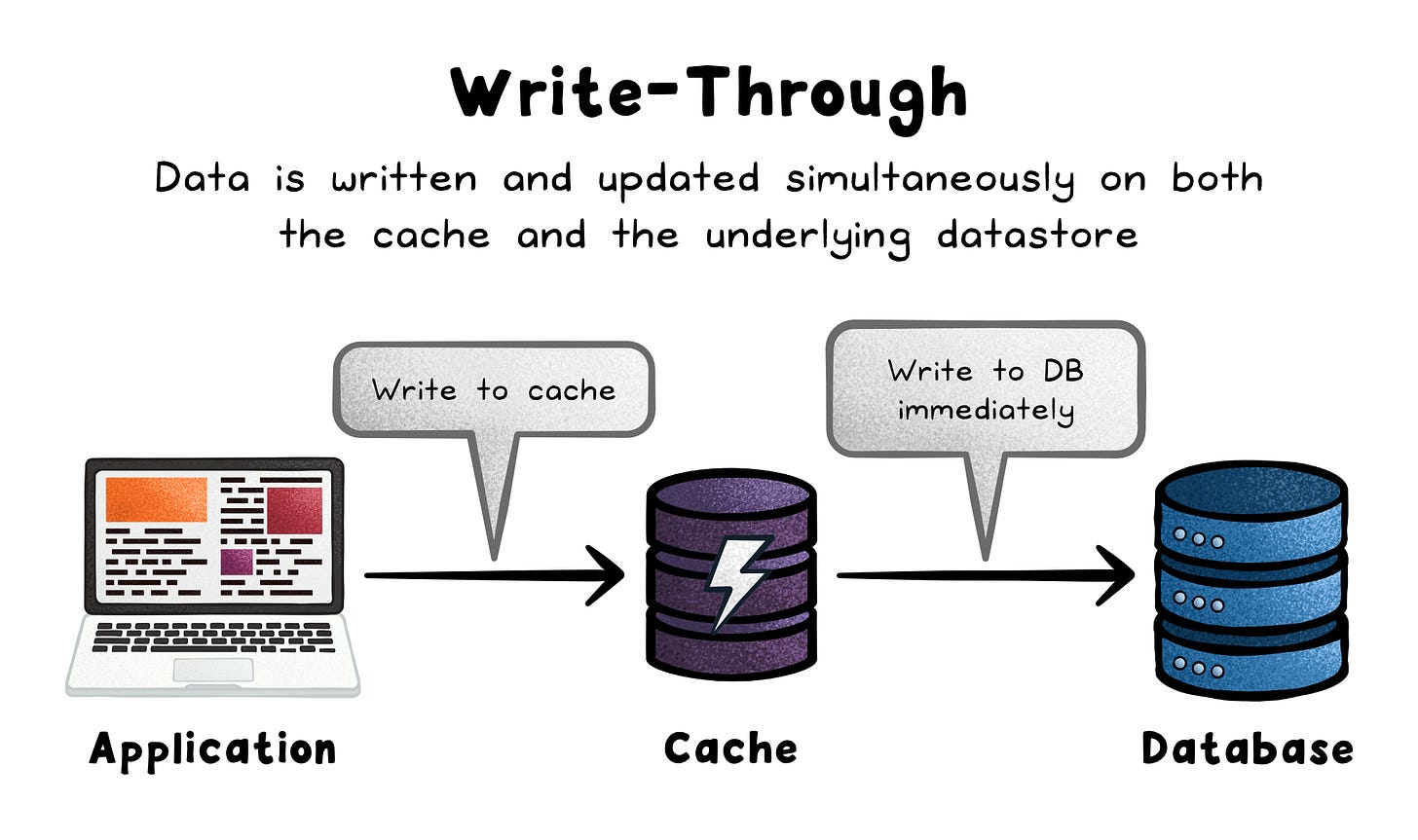

2) Write-Through

Write-through caching updates the cache and the database as part of the same write operation.

Every write goes through the cache layer, which synchronously persists the change to the database before the write is acknowledged. Reads of recently written data therefore find a warm, up-to-date cache.
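A minimal sketch of that write path follows. It assumes a dict stands in for the database, and `WriteThroughCache` is a hypothetical class, not a library API. The sketch persists to the store *before* updating the cache. That ordering is an assumption: if the database write raises, the cache is left untouched and never holds an unpersisted value.

```python
from typing import Optional


class WriteThroughCache:
    """Minimal write-through cache: every write updates the backing store and
    the cache in the same call, before returning to the caller."""

    def __init__(self, store: dict[str, str]) -> None:
        self.store = store                  # stand-in for the database
        self.cache: dict[str, str] = {}

    def write(self, key: str, value: str) -> None:
        self.store[key] = value             # persist synchronously first...
        self.cache[key] = value             # ...then update the cache

    def read(self, key: str) -> Optional[str]:
        if key in self.cache:
            return self.cache[key]
        value = self.store.get(key)         # e.g. after an eviction or restart
        if value is not None:
            self.cache[key] = value
        return value
```

Every `write` pays for two updates, which is where the higher write latency comes from. Every written key also lands in the cache whether or not anyone reads it, which is the cache-pollution risk.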

Pros:

Strong consistency → Cache and database stay in sync after every successful write.

Simple reads → No stale data surprises.

Predictable behavior → Fewer edge cases to reason about.

Cons:

Higher write latency → Every write incurs the cost of writing to two systems (cache and database).

Cache pollution → Cache can be flooded with infrequently read data.

Best used when data integrity and consistency are critical. Think e-commerce transactions or financial apps where stale reads aren’t an option.

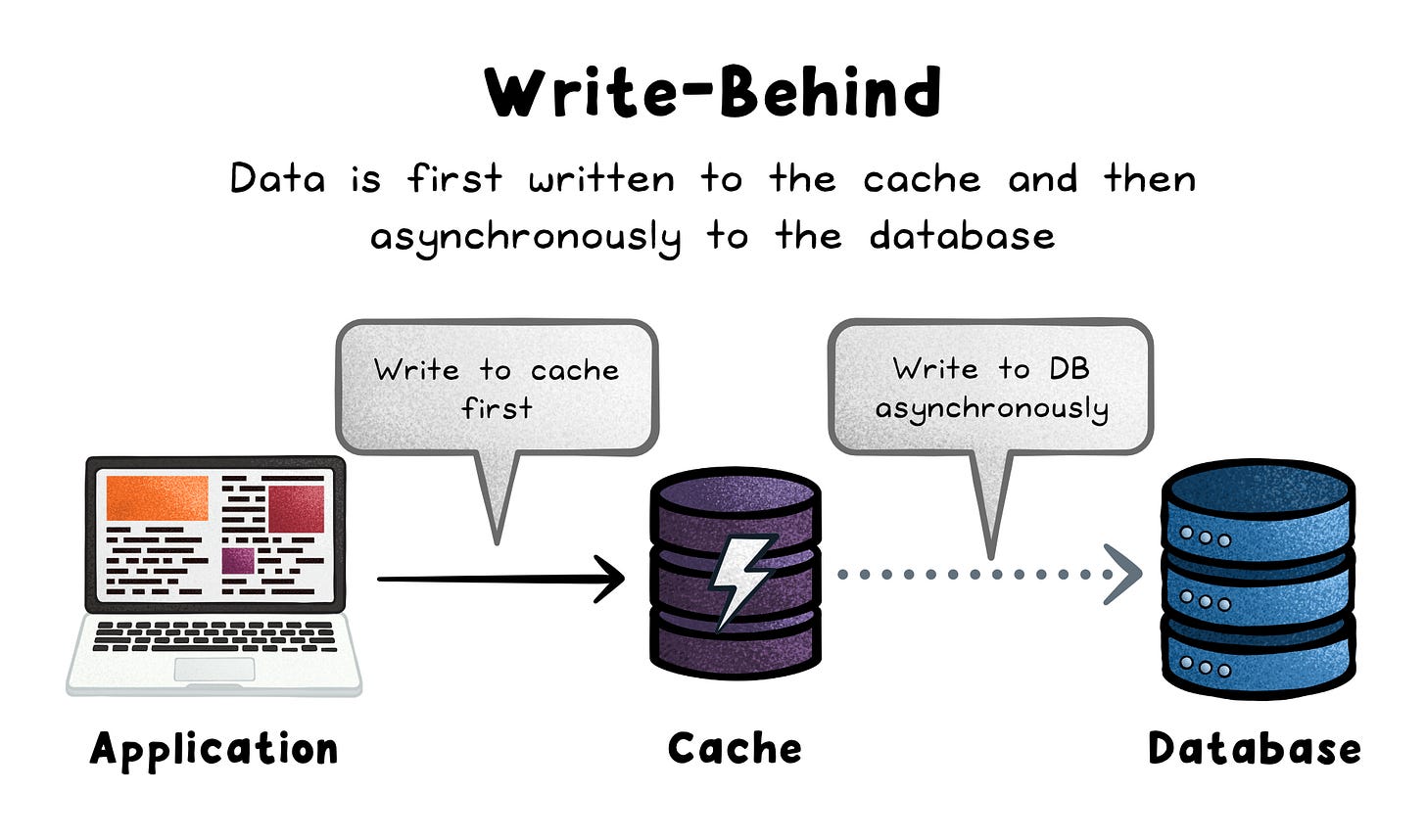

3) Write-Behind (Write-Back)

Write-behind (or write-back) caching updates the cache first and the database later, in the background. "Dirty" entries (written to the cache but not yet persisted) are flushed on triggers such as a time interval or a batch-size threshold.
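Here is a minimal sketch, assuming a dict stands in for the database. `WriteBehindCache` and its `batch_size` flush trigger are hypothetical. A time-based trigger would simply call `flush()` from a timer, which is left out here. Dirty keys live in a set, so repeated writes to one key coalesce into a single database write.

```python
from typing import Optional


class WriteBehindCache:
    """Minimal write-behind cache: writes land in the cache immediately and are
    flushed to the backing store later, in batches."""

    def __init__(self, store: dict[str, str], batch_size: int = 3) -> None:
        self.store = store                  # stand-in for the database
        self.cache: dict[str, str] = {}
        self.dirty: set[str] = set()        # keys written but not yet persisted
        self.batch_size = batch_size        # hypothetical flush trigger

    def write(self, key: str, value: str) -> None:
        self.cache[key] = value             # fast path: no database round-trip
        self.dirty.add(key)
        if len(self.dirty) >= self.batch_size:
            self.flush()

    def read(self, key: str) -> Optional[str]:
        if key in self.cache:
            return self.cache[key]
        return self.store.get(key)

    def flush(self) -> None:
        """Persist every dirty key in one batch (a timer could also call this)."""
        for key in self.dirty:
            self.store[key] = self.cache[key]
        self.dirty.clear()
```

Anything still in `dirty` when the process dies never reaches the store. That is exactly the data-loss risk this strategy trades for fast writes.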

Pros:

Fast writes → The application doesn’t wait on the database.

Reduced database pressure → Writes are smoothed and batched.

High throughput → Ideal for write-heavy workloads.

Cons:

Data loss risk → Writes are lost if the cache node crashes before they are flushed to the database.

Harder debugging → Failures surface later, not at write time.

Write-behind is ideal for apps where slight delays in database updates are tolerable, like activity feeds, logs, or ephemeral data.

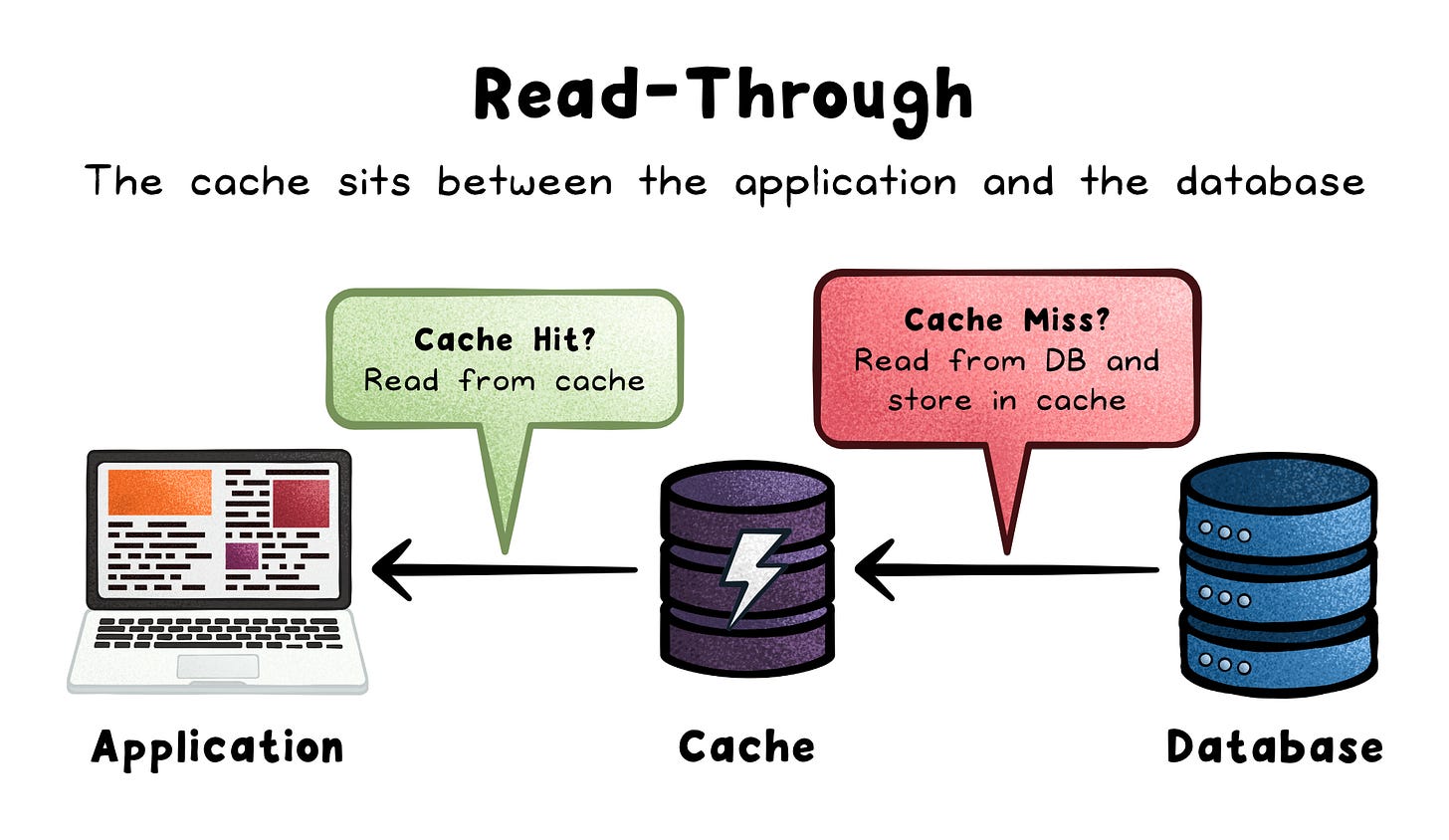

4) Read-Through

A read-through cache acts as a middle layer.

The application queries the cache directly, and on a miss, the cache fetches the data from the database, stores it in the cache, and returns it to the application.
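A minimal sketch of a read-through layer follows. Here the cache, not the application, owns the database lookup. It assumes a `loader` callback that fetches one key from the database. `ReadThroughCache`, `loader`, and `ttl_seconds` are hypothetical names. The TTL bounds how long a stale value can be served, which ties into the staleness risk below.

```python
import time
from typing import Callable, Optional


class ReadThroughCache:
    """Minimal read-through cache: callers only talk to the cache, which loads
    missing or expired entries through a loader function."""

    def __init__(self, loader: Callable[[str], Optional[str]],
                 ttl_seconds: float = 60.0) -> None:
        self.loader = loader                # hypothetical: reads one key from the DB
        self.ttl_seconds = ttl_seconds      # upper bound on how long data may be stale
        self.entries: dict[str, tuple[str, float]] = {}  # key -> (value, expires_at)

    def get(self, key: str) -> Optional[str]:
        now = time.monotonic()
        entry = self.entries.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]                 # fresh hit
        value = self.loader(key)            # miss or expired: the cache loads from the DB
        if value is not None:
            self.entries[key] = (value, now + self.ttl_seconds)
        else:
            self.entries.pop(key, None)     # drop any expired entry for a deleted key
        return value
```

Application code only ever calls `get`. If the underlying row changes, readers keep seeing the old value until its TTL expires. Shorter TTLs reduce staleness but send more reads to the database.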

Pros:

Cleaner application code → No explicit cache logic on reads.

Centralized behavior → Caching rules live in one place.

Efficient for repeated reads → Hot data stays warm automatically.

Cons:

Cold start penalty → Initial cache misses still hit the database, causing a slight delay.

Staleness risk → Requires careful TTLs or invalidation rules.

This is a solid choice for read-heavy applications with stable data, like content libraries or product catalogues.

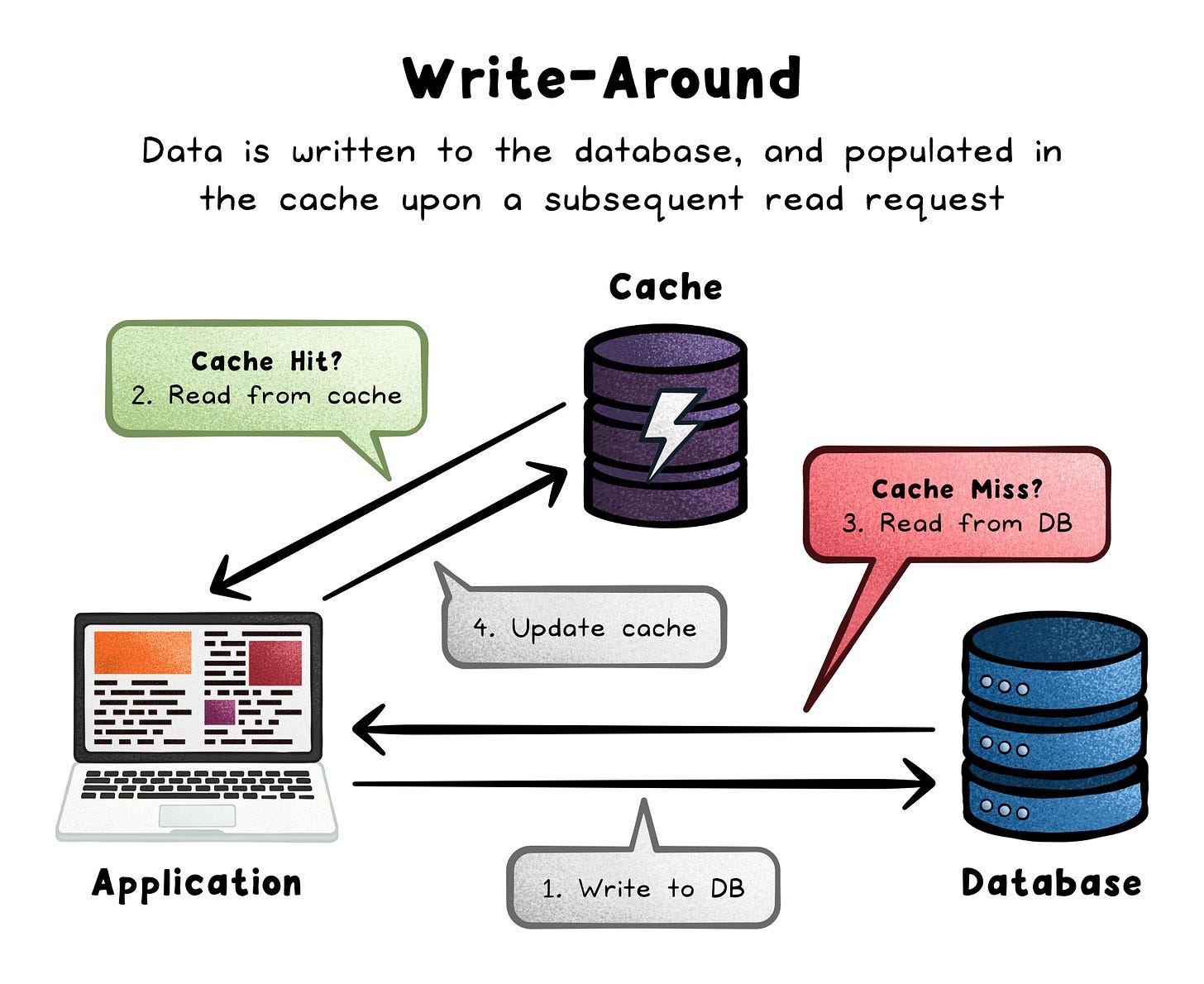

5) Write-Around

Write-around caching skips the cache during writes, sending data straight to the database.

Data enters the cache only when it’s read later. This means new or updated data isn’t cached until requested.
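The sketch below illustrates write-around with a miss counter. It assumes dicts stand in for the database and the cache, and `WriteAroundCache` is a hypothetical name. It also assumes the write drops any older cached copy of the key; without that step, reads could return stale data.

```python
from typing import Optional


class WriteAroundCache:
    """Minimal write-around cache: writes bypass the cache; reads fill it lazily."""

    def __init__(self) -> None:
        self.database: dict[str, str] = {}  # stand-in for the database
        self.cache: dict[str, str] = {}
        self.misses = 0                     # counts reads that had to go to the DB

    def write(self, key: str, value: str) -> None:
        self.database[key] = value          # write goes straight to the database
        self.cache.pop(key, None)           # assumption: invalidate any stale copy

    def read(self, key: str) -> Optional[str]:
        if key in self.cache:
            return self.cache[key]
        self.misses += 1                    # first read after a write always lands here
        value = self.database.get(key)
        if value is not None:
            self.cache[key] = value
        return value
```

A burst of 1,000 writes leaves the cache empty, so hot read data is not evicted. The first read of any written key still pays a database round-trip.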

Pros:

Lean cache usage → Avoids caching data that may never be read.

Reduced churn → Write bursts don’t evict hot read data.

Simple write path → No cache coordination during writes.

Cons:

Guaranteed cache misses after writes → The first read after a write always misses the cache and pays a database round-trip. Any older cached copy must be invalidated on write, or reads will return stale data.

Uneven read latency → Inconsistent performance if reads for new data spike unexpectedly.

Best for apps with frequent writes and infrequent reads, like analytics logs or activity events.

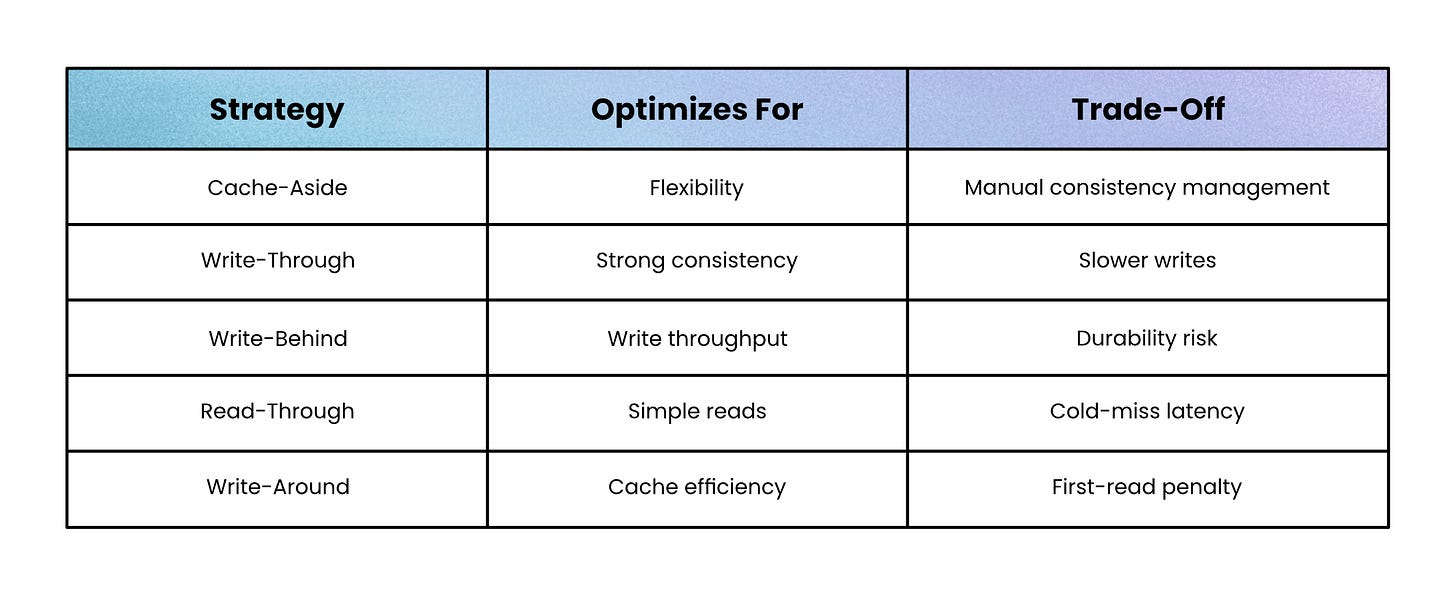

Choosing the right strategy

Each caching strategy comes with its own trade-offs. To decide which one fits your system, consider:

Read vs write ratio → Is your workload read-heavy, write-heavy, or balanced?

Consistency requirements → Can your system tolerate stale data, or is strict consistency a must?

Operational complexity → How much logic are you willing to manage in the application vs the cache layer?

Understanding these trade-offs is key to making caching an enabler, not a liability.

Final thoughts

Caching is not just a performance optimization.

It is part of your system’s correctness model.

The right strategy reduces load, smooths traffic, and keeps latency predictable. The wrong one quietly accumulates risk until a cache eviction, deployment, or incident exposes it.

Match your caching strategy to your access patterns, failure tolerance, and consistency needs, and your system will scale calmly instead of nervously.

