How CDNs Turn Global Traffic Into Local Delivery

(6 Minutes) | How They Work, Benefits, What to Cache and for How Long, Common Mistakes, and When to Use Them

Your site feels fast when you test it, but users across the world tell a different story.

Pages lag, images take seconds, and the backend suddenly looks overloaded. The problem isn’t your code: it’s distance.

Every request from a user has to travel across networks, data centers, and sometimes oceans to reach your origin server, adding milliseconds that stack into seconds.

A Content Delivery Network (CDN) fixes this by caching content at edge locations near your users instead of serving everything from your origin. Most requests are answered by a nearby edge, not your origin, cutting latency and load in one move.

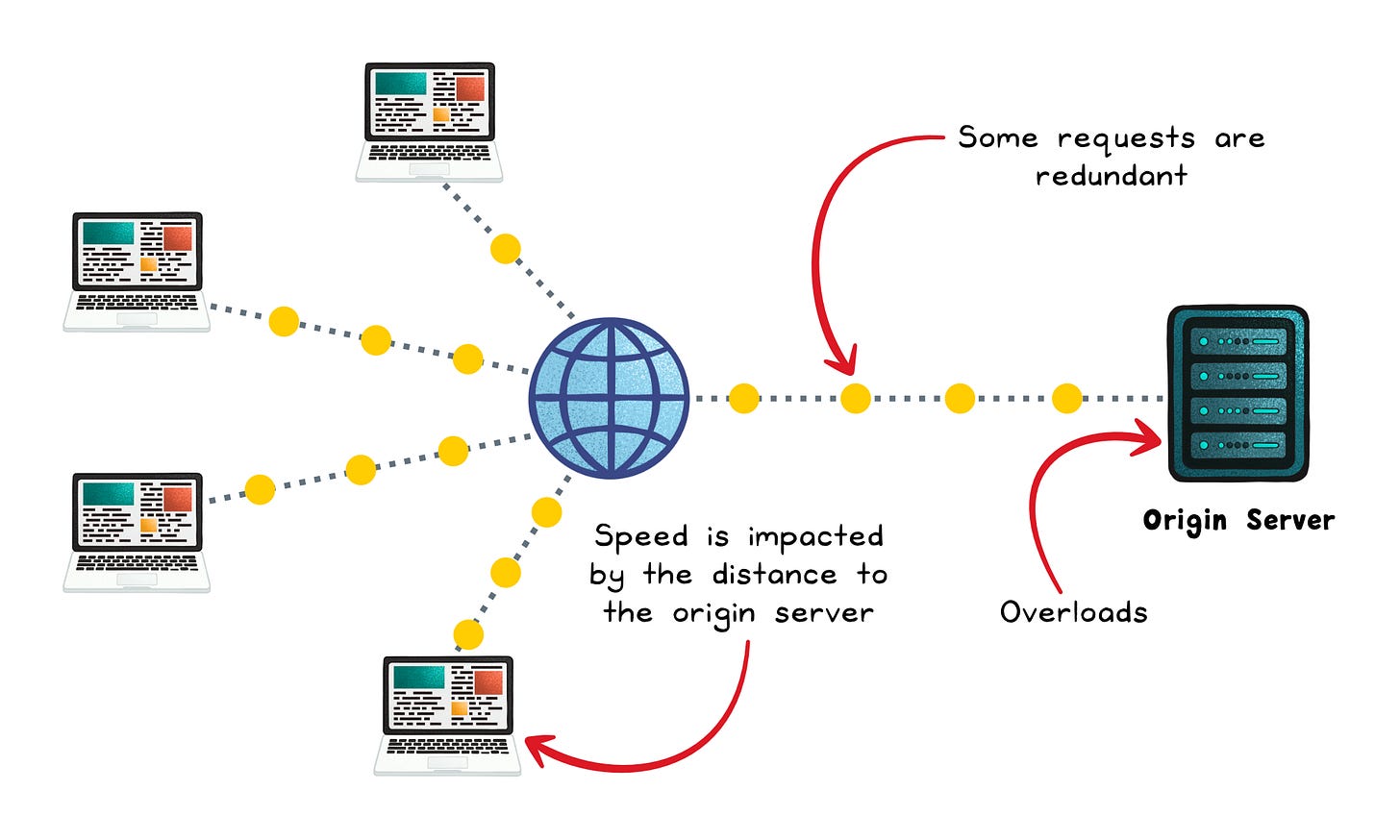

The Limits of a Single Origin

Without a CDN, every user request travels back to your origin server: a short trip for local users, but hundreds of milliseconds for those across the world.

Your origin serves everything: every image, stylesheet, and repeated script download.

When a launch or viral post hits, those redundant requests pile up, saturating bandwidth and slowing down the dynamic parts that matter most.

The result is a system that scales poorly and fails unevenly. Nearby users stay fast while everyone else stalls. Even caching headers or load balancers can’t fix it; they still depend on one overworked origin.

CDNs were built to solve this exact set of problems: distance that adds latency, redundancy that adds load, and architecture that makes “fast” local but not global.

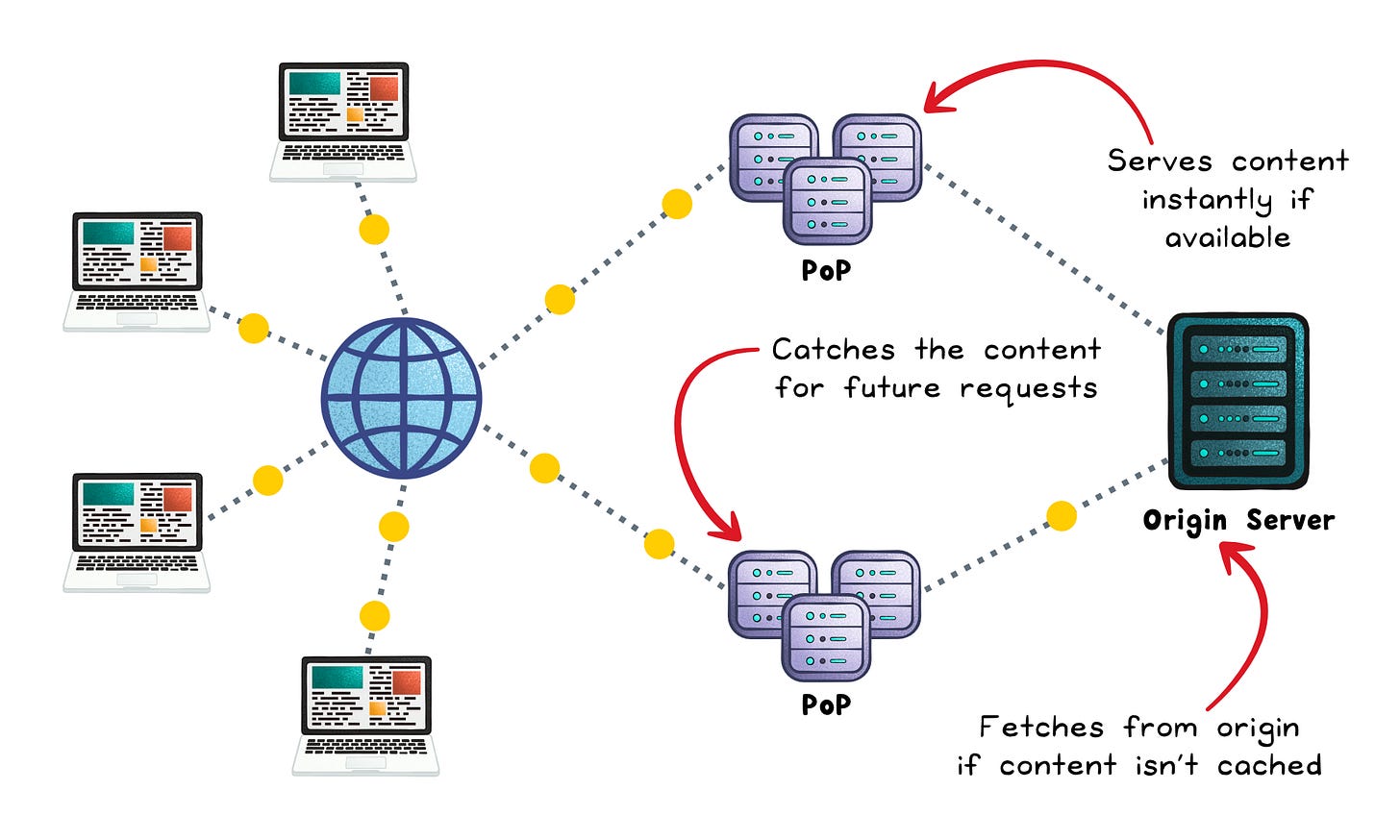

How CDNs Work

A Content Delivery Network (CDN) sits between your users and your origin server. Instead of every request crossing the globe, the CDN serves cached copies from nearby edge servers (Points of Presence, or PoPs).

Each PoP is a small data center built for low-latency delivery, strategically placed around the world.

Here’s what happens step by step:

User request hits the CDN domain → When a user visits your site, DNS or Anycast routing directs the request to the nearest healthy PoP, based on geography or network conditions.

The edge checks its cache → If the content already exists locally (a cache hit), it’s served instantly. Otherwise (a cache miss), the PoP fetches it once from your origin.

The edge stores and reuses → After the first fetch, the PoP caches the asset for future users so nearby requests skip the trip to your server.

Global replication happens naturally → As more users in different regions request the same content, additional PoPs cache their own copies.

Dynamic requests still flow through → Not everything is cacheable. Personalized content, authenticated APIs, and live data are forwarded securely to your origin, but they still benefit from the CDN’s optimized routing, TLS termination, and persistent connections.
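The hit/miss flow above can be sketched as a toy model of a single PoP. This is a minimal illustration, not how any particular CDN is implemented: the `EdgePoP` class, the `fetch_from_origin` callback, and the 60-second TTL are all assumptions made for the example.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple


@dataclass
class EdgePoP:
    """Toy model of one edge location with a TTL-based cache (illustrative only)."""

    fetch_from_origin: Callable[[str], bytes]  # hypothetical origin client
    ttl_seconds: float = 60.0                  # assumed TTL for the example
    clock: Callable[[], float] = time.monotonic
    # Maps path -> (body, expiry timestamp).
    _cache: Dict[str, Tuple[bytes, float]] = field(default_factory=dict, init=False)

    def handle(self, path: str) -> Tuple[bytes, str]:
        """Serve from cache on a fresh hit; otherwise fetch once and store."""
        now = self.clock()
        entry = self._cache.get(path)
        if entry is not None and entry[1] > now:
            return entry[0], "HIT"
        body = self.fetch_from_origin(path)
        self._cache[path] = (body, now + self.ttl_seconds)
        return body, "MISS"


if __name__ == "__main__":
    pop = EdgePoP(fetch_from_origin=lambda path: f"content of {path}".encode())
    print(pop.handle("/logo.png")[1])  # MISS: first request goes to the origin
    print(pop.handle("/logo.png")[1])  # HIT: served from the edge cache
```

Each PoP in this model keeps its own cache, which mirrors how additional regions fill their copies only when local users ask for the content.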

Behind the scenes, CDNs also manage:

Smart routing → DNS or Anycast directs traffic to the nearest healthy edge and reroutes during congestion or outages.

Protocol optimization → CDNs manage HTTP/2 and HTTP/3, reuse connections, and apply compression to deliver faster than most origins.

Edge logic → Many CDNs run lightweight code at the edge: handling redirects, rewriting headers, or running simple compute (like authentication or A/B tests) without touching your backend.

Benefits of Using a CDN

A CDN does more than make pages load faster; it reshapes how your system handles scale, reliability, and cost.

Lower latency → Content is served from edge locations near users, cutting round-trip time and improving perceived speed.

Higher reliability → Traffic automatically reroutes to healthy edges, masking regional outages and origin downtime.

Origin offload → Static and repeat requests stay at the edge, reducing bandwidth and compute load on your backend.

Better scalability → Edge caching absorbs traffic spikes without extra servers.

Built-in security → Most CDNs provide DDoS protection, TLS termination, and bot filtering at the network edge.

Optimized delivery → Features like HTTP/3, Brotli compression, and connection reuse improve efficiency on real-world networks.

What to Cache and for How Long

Caching is about balance: keep what rarely changes close to users and only hit your origin when you must.

1. Static assets → Cache for a long time

Images, stylesheets, JavaScript bundles, and fonts rarely change. Cache them for weeks or months, and use versioned filenames (app.v2.js, logo.v3.png) so new versions fetch automatically without purges.
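One common way to get versioned filenames without bumping numbers by hand is to embed a hash of the file's content in its name. The sketch below shows that variant, not the manual `v2`/`v3` scheme above; the function name `fingerprinted_name` and the 8-character digest length are assumptions for illustration.

```python
import hashlib
from pathlib import PurePosixPath


def fingerprinted_name(filename: str, content: bytes, digest_len: int = 8) -> str:
    """Return the filename with a short content hash inserted before the extension.

    Any change to the content yields a new name, so the new URL is fetched
    fresh while the old one can stay cached for as long as its TTL allows.
    """
    digest = hashlib.sha256(content).hexdigest()[:digest_len]
    path = PurePosixPath(filename)
    return str(path.with_name(f"{path.stem}.{digest}{path.suffix}"))


if __name__ == "__main__":
    print(fingerprinted_name("app.js", b"console.log('v1');"))
    print(fingerprinted_name("app.js", b"console.log('v2');"))  # different name
```

Build tools typically do this step for you; the point is only that the URL changes exactly when the content does.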

2. Semi-dynamic content → Cache briefly or validate

Pages like home feeds or product lists update often but not constantly. Use short TTLs (30s–5m) or validation headers (ETag, Last-Modified) to revalidate instead of refetching.

3. Personalized or sensitive content → Don’t cache

Anything tied to a user or session should always come from the origin. Mark these with Cache-Control: private, no-store to prevent accidental edge caching.

4. APIs and JSON responses → Cache selectively

Public or read-heavy endpoints (like configuration, metadata, or lookup data) can safely be cached for a few seconds to a few minutes. That’s often enough to absorb traffic spikes without risking stale data.

For dynamic APIs, consider using conditional requests (an ETag sent back in If-None-Match) so the CDN or client can revalidate quickly instead of refetching the full payload.
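A conditional request can be sketched from the origin's side as follows. This is a simplified illustration under stated assumptions: `make_etag` and `respond` are hypothetical helpers, the ETag is derived from a hash of the body, and weak validators (`W/` prefixes) are not handled.

```python
import hashlib
from typing import Dict, Optional, Tuple


def make_etag(body: bytes) -> str:
    """Build a quoted ETag from a hash of the body (one possible scheme)."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond(body: bytes, if_none_match: Optional[str]) -> Tuple[int, Dict[str, str], bytes]:
    """Return (status, headers, payload) for a GET with an optional If-None-Match."""
    etag = make_etag(body)
    headers = {"ETag": etag}
    if if_none_match is not None:
        candidates = [tag.strip() for tag in if_none_match.split(",")]
        if "*" in candidates or etag in candidates:
            # The caller's copy is still current: send no payload.
            return 304, headers, b""
    return 200, headers, body


if __name__ == "__main__":
    status, headers, _ = respond(b'{"plan": "pro"}', None)
    print(status)  # 200 with the full payload
    status, _, payload = respond(b'{"plan": "pro"}', headers["ETag"])
    print(status, len(payload))  # 304 with an empty payload
```

The saving comes from the 304 path: the CDN or client confirms its copy is current without transferring the body again.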

5. Error responses → Cache carefully

During outages, use stale-if-error rules to serve the last good response temporarily. It smooths over incidents without hiding deeper issues.
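The categories above can be summarized as a small lookup from content class to a `Cache-Control` value. This is a sketch with assumed numbers: the class names, the exact durations (30 days, 60 seconds, 30 seconds, a 5-minute `stale-if-error` window), and the `cache_control_for` helper are illustrative choices within the ranges described, and support for `stale-if-error` varies by CDN.

```python
# Illustrative mapping from content class to a Cache-Control header value.
# All durations are assumptions for the example, not recommendations.
POLICIES = {
    # Versioned static assets: cache for a long time (30 days here).
    "static": "public, max-age=2592000, immutable",
    # Feeds and product lists: short TTL, serve stale briefly if the origin errors.
    "semi_dynamic": "public, max-age=60, stale-if-error=300",
    # Anything tied to a user or session: never stored at the edge.
    "personalized": "private, no-store",
    # Public, read-heavy API responses: a few seconds to minutes.
    "public_api": "public, max-age=30",
}


def cache_control_for(content_class: str) -> str:
    """Return the header value for a class, defaulting to the safest option."""
    return POLICIES.get(content_class, "no-store")


if __name__ == "__main__":
    for name in ("static", "semi_dynamic", "personalized", "public_api"):
        print(f"{name}: Cache-Control: {cache_control_for(name)}")
```

Defaulting unknown classes to `no-store` is a deliberate choice in this sketch: an uncached response costs some performance, while an accidentally cached private response can leak data.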

Common Mistakes (and When Not to Use a CDN)

A CDN can make your system faster, cheaper, and more reliable, but only when configured well.

Misuse it, and you’ll trade one problem for another.

What to Avoid

Long TTLs without versioning → If filenames stay the same, edge nodes keep serving stale versions long after an update. Always version assets (e.g. main.v3.css) so new URLs trigger fresh fetches automatically.

Noisy URLs → CDNs treat every unique URL as a separate object. Query strings create too many cache variations and quickly lower your hit ratio. Normalize cache keys and strip irrelevant parameters so repeated requests reuse the same cached item.

Exposed origin servers → Attackers can bypass the CDN entirely. Restrict origin access to CDN IPs or use authenticated origin pulls.

Aggressive global purges → Clearing the entire cache after every update triggers a rush of refill requests to your origin. Use versioned URLs and targeted invalidations to avoid origin stampedes.
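Cache key normalization, mentioned under noisy URLs, might look like the sketch below. Most CDNs expose this as configuration rather than code, so treat it as an illustration of the idea only; the `cache_key` function and the list of ignored parameters are assumptions.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Parameters assumed not to affect the response (illustrative list).
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "fbclid", "gclid"}


def cache_key(url: str) -> str:
    """Normalize a URL so equivalent requests map to one cached object."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in IGNORED_PARAMS
    )
    # Lowercase the host, sort the remaining parameters, drop the fragment.
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))


if __name__ == "__main__":
    print(cache_key("https://Example.com/p?b=2&utm_source=mail&a=1"))
    # https://example.com/p?a=1&b=2
    print(cache_key("https://example.com/p?a=1&b=2&fbclid=abc#top"))
    # https://example.com/p?a=1&b=2
```

Both URLs collapse to the same key, so the second request can reuse the object cached for the first instead of triggering another origin fetch.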

When Not to Use (or Rely on) a CDN

Personalized or per-user traffic → Every response is unique, so caching won’t help. Use the CDN for routing, TLS offload, and compression, but let the origin handle the logic.

Strict data residency requirements → Some regions require data to stay local. If your CDN can’t guarantee regional confinement, skip edge caching for those requests.

Internal or low-latency systems → Within the same data center or VPC, a CDN adds extra hops without improving speed. Direct connections are faster and simpler.

Single-provider dependence → Relying entirely on one CDN creates a hidden single point of failure. For critical workloads, design a multi-CDN strategy or keep an origin fallback ready.

The Bigger Picture

A CDN starts as a performance fix, but its real impact goes deeper.

By shifting traffic away from your origin, you buy yourself headroom: fewer spikes, fewer late-night alerts, and more predictable scaling.

It’s also a cost win. Serving from the edge cuts bandwidth and compute at the origin, especially for heavy assets and high-read workloads. Your database, API, and storage services all breathe easier. In high-traffic environments, that translates directly into lower infrastructure costs and smaller scaling margins.

Then come the quiet benefits. CDNs terminate TLS, absorb DDoS floods, filter bad bots, and smooth regional routing, all before the traffic even reaches your app.

A CDN isn’t just a cache.

It’s a global distribution layer, one that makes your system faster, steadier, and safer without changing your code.
