Top 5 Database Caching Strategies You Should Know

(5 Minutes) | Unpacking The Most Popular Database Caching Strategies



Caching is a core performance optimization technique. Done right, it reduces latency, lowers database load, and improves throughput, without sacrificing availability.

But caching isn’t one-size-fits-all.

The right strategy depends on your system’s access patterns, consistency requirements, and tolerance for stale data.

Let’s dive into five of the most widely used caching strategies.

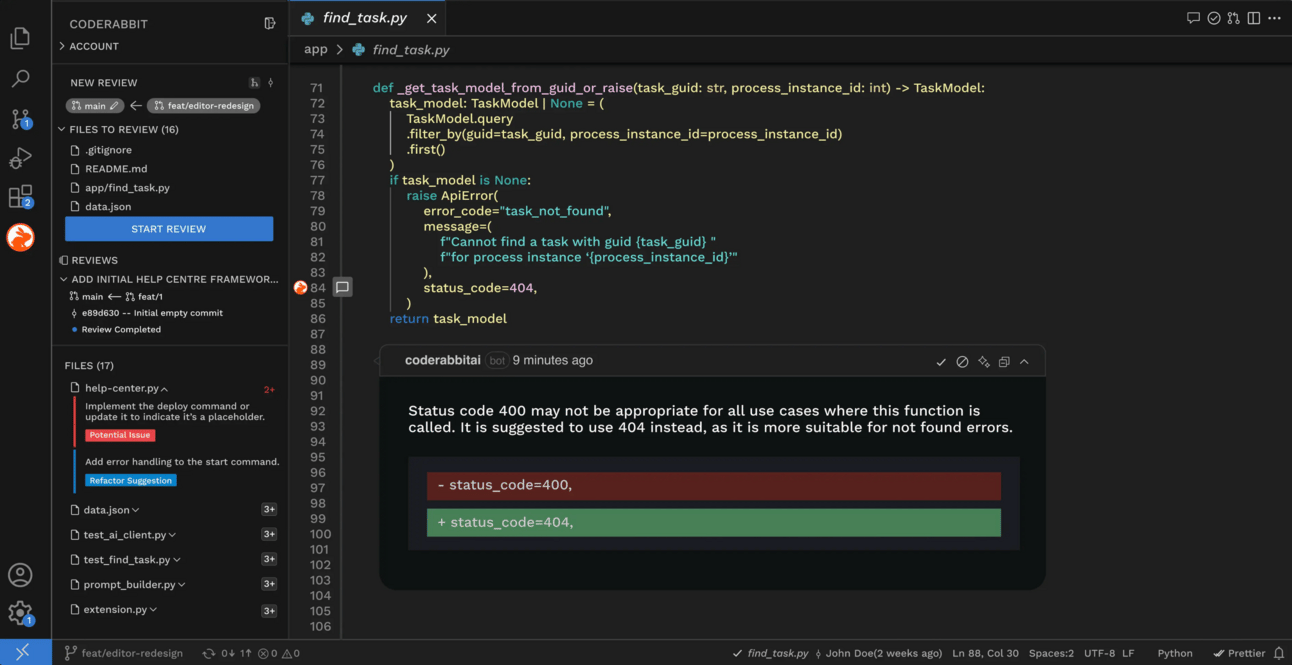

1) Cache-Aside

In a cache-aside strategy, the application explicitly manages reads and writes to the cache.

It checks the cache first. On a cache miss, it fetches the data from the database, stores it in the cache, and returns it.

The cache only stores data after it’s been read by the app.
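Here is a minimal Python sketch of the cache-aside flow. It assumes plain dicts stand in for the cache and the database (a real system might use Redis and a SQL store). The class name `CacheAsideRepository` and the `db_reads` counter are hypothetical and exist only for illustration. The write path, which updates the database and then deletes the cached key, is one common way to handle invalidation, not the only one.

```python
class CacheAsideRepository:
    """Cache-aside: the application code manages the cache explicitly."""

    def __init__(self, database: dict):
        self.database = database  # stand-in for the system of record
        self.cache = {}           # stand-in for an external cache
        self.db_reads = 0         # counts database round trips, for illustration

    def get(self, key):
        # 1. Check the cache first.
        if key in self.cache:
            return self.cache[key]
        # 2. On a miss, read from the database...
        self.db_reads += 1
        value = self.database.get(key)
        # 3. ...then populate the cache before returning.
        if value is not None:
            self.cache[key] = value
        return value

    def update(self, key, value):
        # Write to the database, then invalidate the cached copy
        # so the next read reloads fresh data.
        self.database[key] = value
        self.cache.pop(key, None)


repo = CacheAsideRepository({"user:1": "Alice"})
print(repo.get("user:1"), repo.db_reads)  # Alice 1  (miss, loaded from database)
print(repo.get("user:1"), repo.db_reads)  # Alice 1  (hit, served from cache)
repo.update("user:1", "Alicia")
print(repo.get("user:1"), repo.db_reads)  # Alicia 2 (miss after invalidation)
```

Every cache decision lives in application code, which is exactly the control (and the extra logic) described below.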

Pros:

Full control over what is cached and when, which suits irregular access patterns and dynamic data.

Only caches what’s actually requested.

Cons:

You’re on the hook for managing cache invalidation and consistency.

Adds extra logic to application code.

Cache-aside shines in systems where usage patterns are unpredictable or data freshness varies. However, beware that sloppy cache management can lead to stale reads or wasted memory.

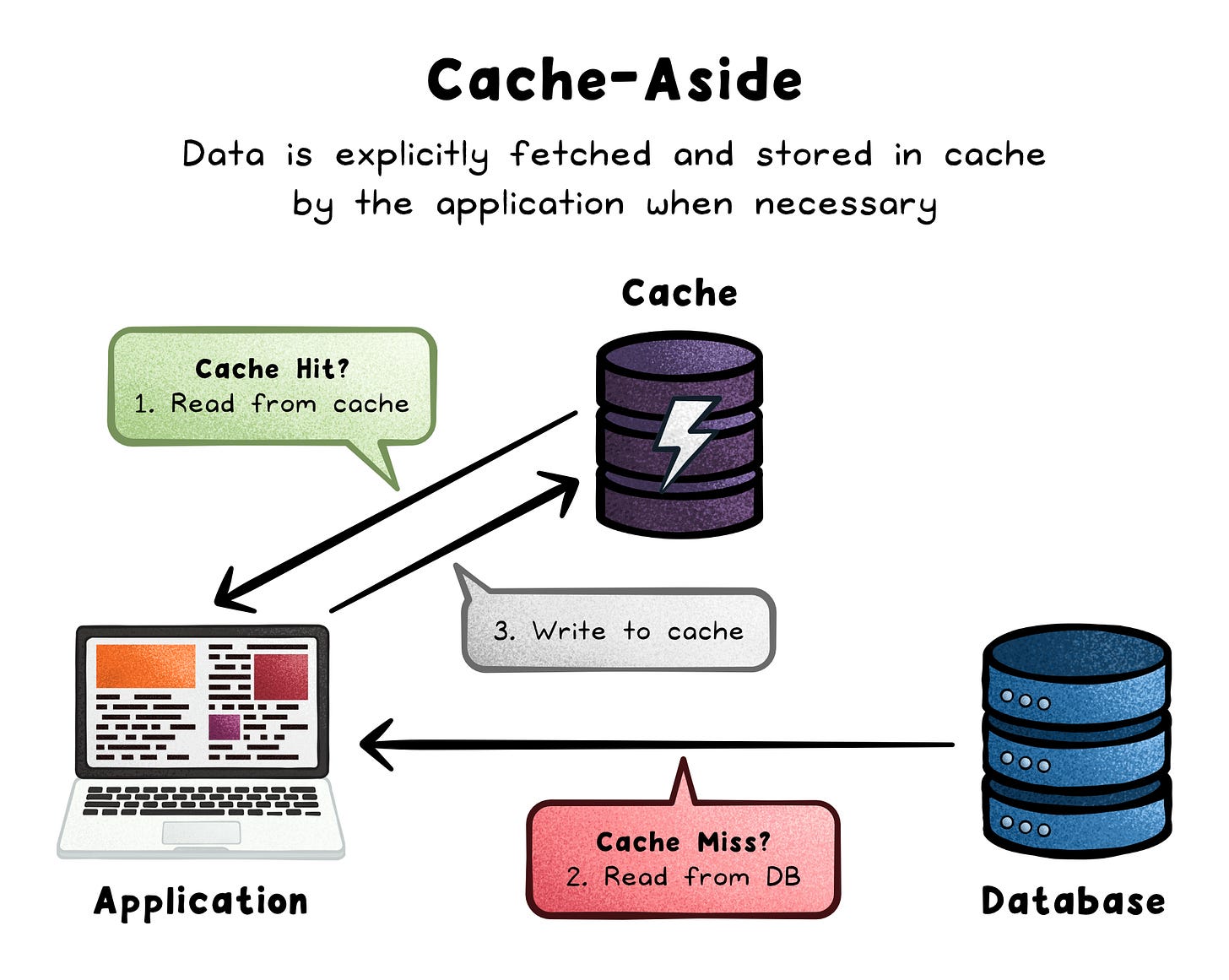

2) Write-Through

In a write-through strategy, every write updates both the cache and the database synchronously, and the write is acknowledged only after both succeed. This keeps the cache in sync with the database.
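A minimal sketch of a write-through store follows, again using dicts as hypothetical stand-ins for the cache and the database. Here the database is written before the cache, so a failed database write never leaves a value in the cache that the database lacks; that ordering is a design choice of this sketch. The read fallback on a miss is an assumption that covers evictions and cold starts.

```python
class WriteThroughStore:
    """Write-through: every write reaches the database and the cache before it returns."""

    def __init__(self, database: dict):
        self.database = database  # stand-in for the system of record
        self.cache = {}           # stand-in for an external cache

    def write(self, key, value):
        # Persist to the database first; if this step raised an error,
        # the cache would not yet hold the new value.
        self.database[key] = value
        # Then update the cache. The call returns only after both writes finish.
        self.cache[key] = value

    def read(self, key):
        # Normally a cache hit; fall back to the database after an eviction or cold start.
        if key in self.cache:
            return self.cache[key]
        value = self.database.get(key)
        if value is not None:
            self.cache[key] = value
        return value


store = WriteThroughStore(database={})
store.write("order:42", "paid")
print(store.database["order:42"] == store.cache["order:42"])  # True
```

Each write pays for two updates, which is where the extra write latency comes from.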

Pros:

Data is always consistent between cache and database.

Simplifies reads: they can be served from the cache with little risk of stale data, as long as every write goes through the cache.

Cons:

Higher write latency: every write incurs the cost of writing to two systems (cache and database).

Cache can be flooded with infrequently read data.

Best used when data integrity and consistency are critical—think e-commerce transactions or financial apps where stale reads aren’t an option.

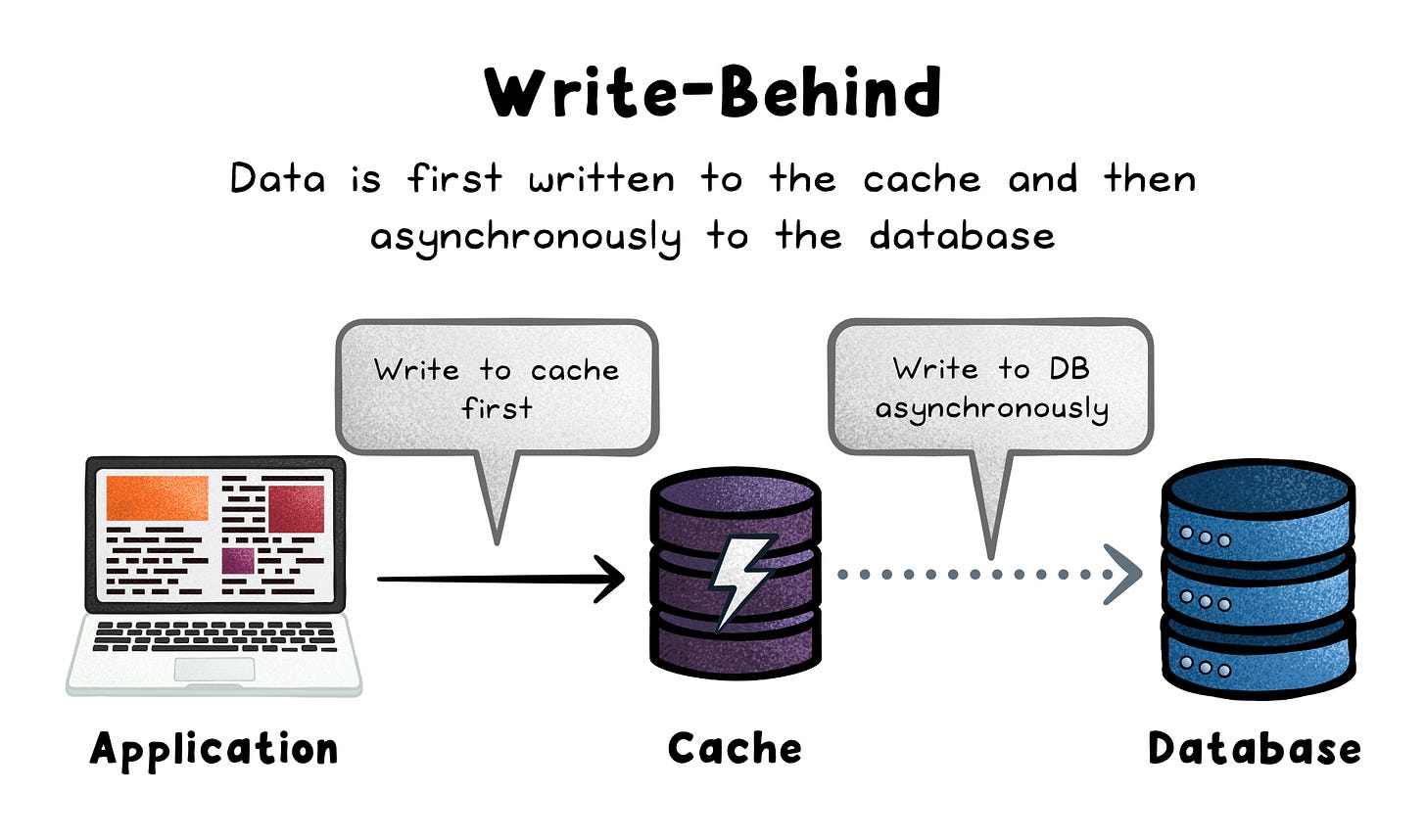

3) Write-Behind (Write-Back)

Write-behind (or write-back) caching updates the cache first and writes to the database later, in the background. “Dirty” entries (changed in the cache but not yet persisted) are flushed on a trigger such as a time interval or a batch-size threshold.
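The sketch below shows write-behind with two assumed flush triggers: a batch-size threshold and a maximum age for the oldest unflushed write. The parameter names `max_batch` and `max_delay_s` are hypothetical. To keep it short, the age check runs only on writes; a production system would typically also flush from a background timer.

```python
import time


class WriteBehindCache:
    """Write-behind: writes land in the cache and are persisted later in batches."""

    def __init__(self, database: dict, max_batch: int = 3, max_delay_s: float = 5.0):
        self.database = database        # stand-in for the system of record
        self.cache = {}                 # stand-in for an external cache
        self.dirty = set()              # keys updated in the cache but not yet persisted
        self.max_batch = max_batch      # flush once this many keys are dirty
        self.max_delay_s = max_delay_s  # or once the oldest dirty write is this old
        self.oldest_dirty_at = None

    def write(self, key, value):
        # The write is acknowledged as soon as the cache is updated.
        self.cache[key] = value
        if not self.dirty:
            self.oldest_dirty_at = time.monotonic()
        self.dirty.add(key)
        too_many = len(self.dirty) >= self.max_batch
        too_old = time.monotonic() - self.oldest_dirty_at >= self.max_delay_s
        if too_many or too_old:
            self.flush()

    def read(self, key):
        # The cache holds the newest value; the database may lag behind it.
        if key in self.cache:
            return self.cache[key]
        return self.database.get(key)

    def flush(self):
        # Persist all dirty entries in one batch. Anything still dirty when
        # the process crashes never reaches the database.
        for key in self.dirty:
            self.database[key] = self.cache[key]
        self.dirty.clear()
        self.oldest_dirty_at = None


db = {}
counter_cache = WriteBehindCache(db, max_batch=3)
counter_cache.write("views:1", 10)
counter_cache.write("views:2", 7)
print(db)       # {}  (nothing persisted yet)
counter_cache.write("views:3", 4)  # third dirty key triggers a flush
print(len(db))  # 3
```

The `dirty` set is the window of exposure: anything in it is acknowledged to the caller but not yet durable.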

Pros:

Fast writes, since a write completes once the cache is updated; well suited to write-heavy workloads.

Reduces database load by batching writes instead of applying each one immediately.

Cons:

Risk of data loss if the cache node crashes before dirty data is flushed to the database.

Can complicate troubleshooting if the database lags too far behind the cache.

Write-behind is ideal for apps where slight delays in database updates are tolerable—like activity feeds, logs, or ephemeral data.

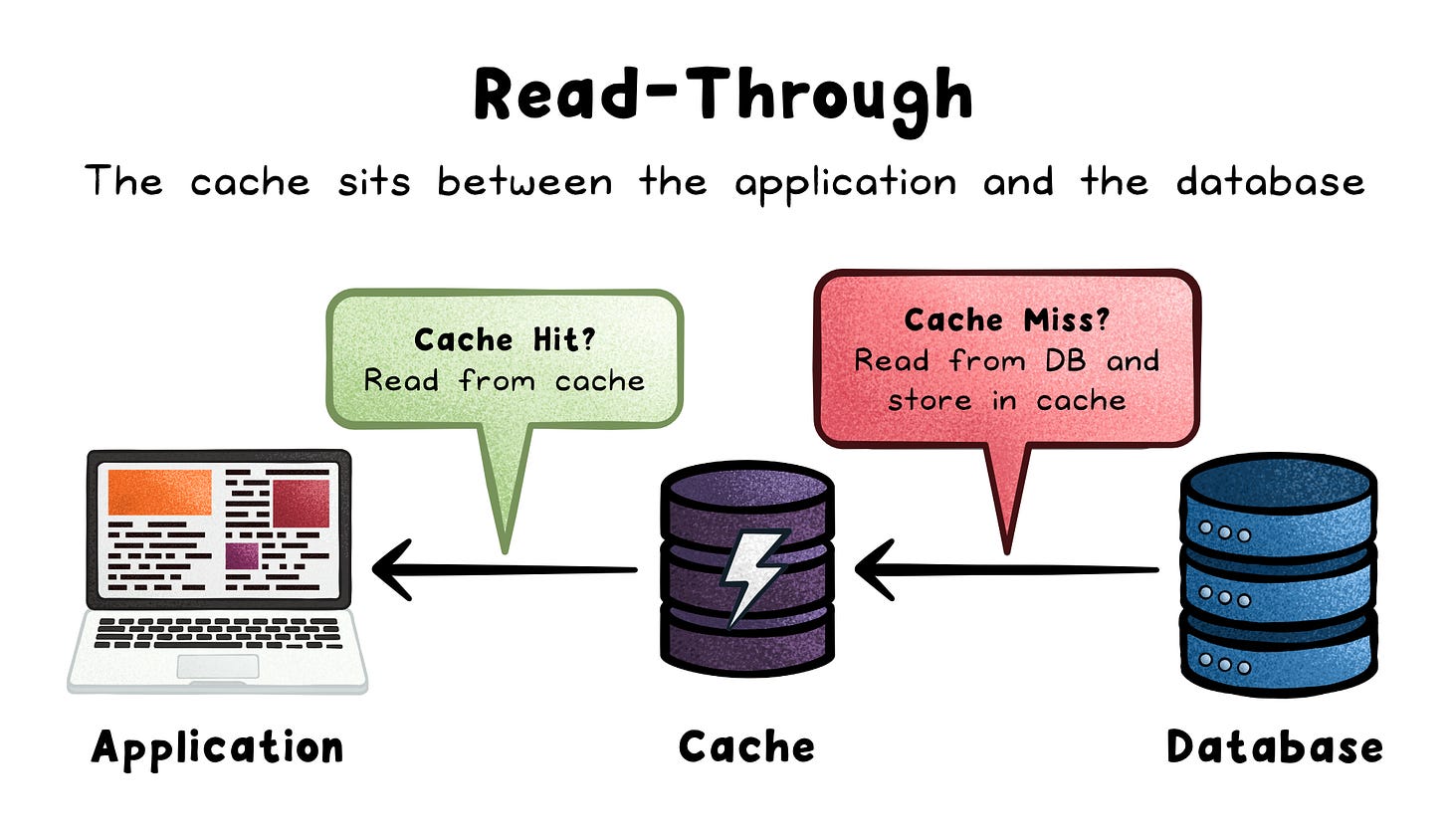

4) Read-Through

A read-through cache acts as a middle layer. The application queries the cache directly, and on a miss, the cache fetches the data from the database, stores it in the cache, and returns it to the application.
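A minimal read-through sketch follows, in which the cache owns a loader function and the application only ever calls `get`. The `loader` callable and the time-to-live (`ttl_s`) are illustrative assumptions; a TTL is one way to bound staleness when the underlying data changes.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class ReadThroughCache:
    """Read-through: on a miss, the cache itself loads the value from the database."""

    def __init__(self, loader: Callable[[str], Optional[str]], ttl_s: float = 60.0):
        self._loader = loader  # how the cache fetches from the database (hypothetical)
        self._ttl_s = ttl_s    # entries expire after this many seconds
        self._entries: Dict[str, Tuple[str, float]] = {}  # key -> (value, expires_at)

    def get(self, key: str) -> Optional[str]:
        now = time.monotonic()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]  # fresh hit
        # Miss or expired: the cache, not the application, queries the database.
        value = self._loader(key)
        if value is not None:
            self._entries[key] = (value, now + self._ttl_s)
        return value


database = {"product:7": "Blue mug"}
db_calls = []


def load_from_db(key: str) -> Optional[str]:
    db_calls.append(key)  # record each database hit
    return database.get(key)


catalog = ReadThroughCache(loader=load_from_db, ttl_s=300)
catalog.get("product:7")
catalog.get("product:7")
print(len(db_calls))  # 1  (the second read was a cache hit)
```

Compared with cache-aside, the miss-handling logic has moved out of the application and into the cache layer.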

Pros:

Application code is simplified—no need for explicit cache logic on reads.

Reduces database load for repeat queries.

Cons:

Initial cache misses still hit the database, causing a slight delay.

Risk of stale data if underlying data changes frequently and no expiration policy is in place.

This is a solid choice for read-heavy applications with stable data, like content libraries or product catalogues.

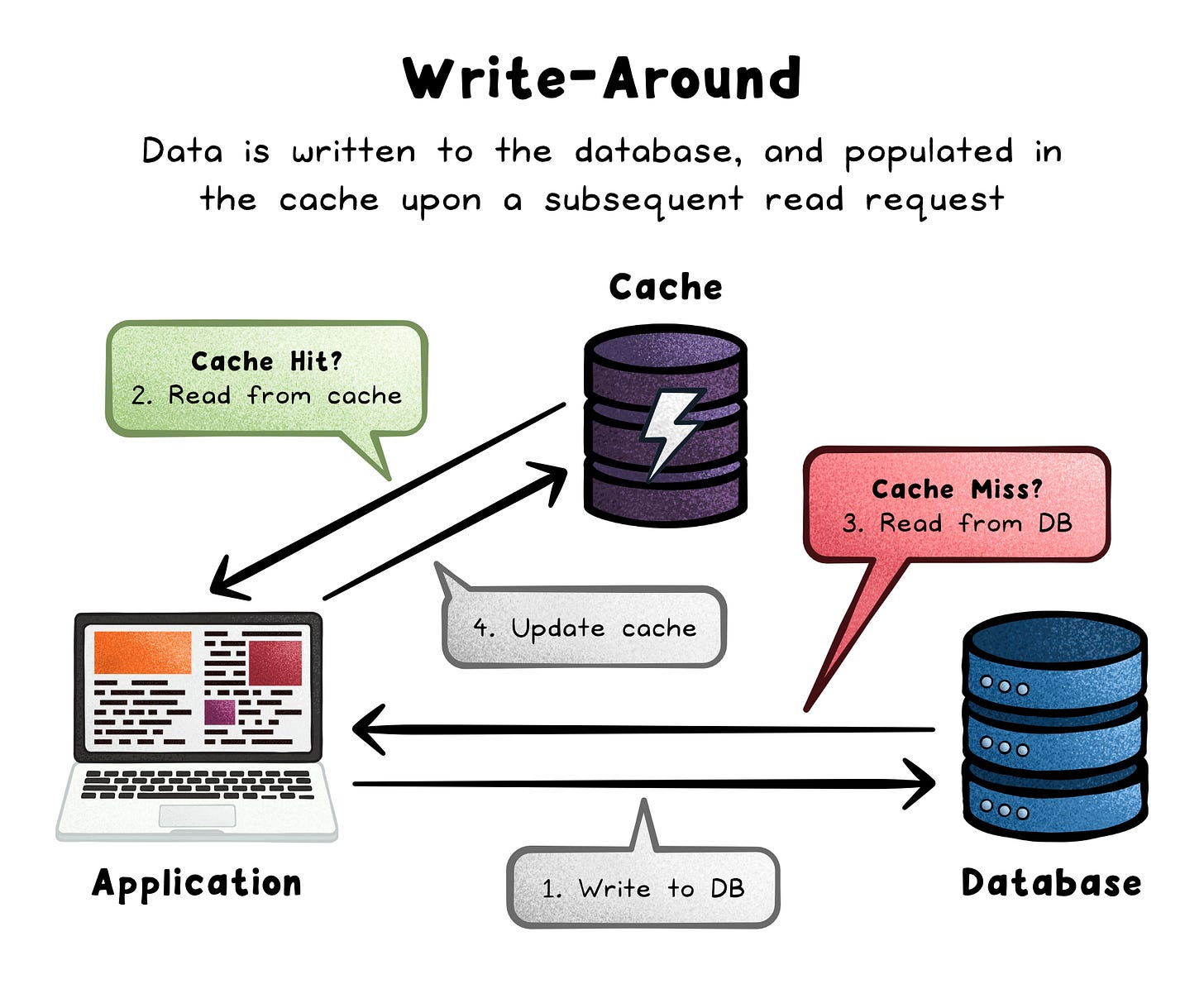

5) Write-Around

Write-around caching skips the cache during writes, sending data straight to the database.

Data enters the cache only when it’s read later. This means new or updated data isn’t cached until requested.
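A minimal write-around sketch with dict stand-ins follows. Besides skipping the cache, the write deletes any cached copy of the key; that invalidation step is an assumption added here (a common companion to write-around) so reads don't return outdated values.

```python
class WriteAroundStore:
    """Write-around: writes skip the cache; data is cached only when read."""

    def __init__(self, database: dict):
        self.database = database  # stand-in for the system of record
        self.cache = {}           # stand-in for an external cache

    def write(self, key, value):
        # Send the write straight to the database...
        self.database[key] = value
        # ...and drop any cached copy so it cannot be served stale (assumed invalidation step).
        self.cache.pop(key, None)

    def read(self, key):
        # The first read after a write misses and loads from the database.
        if key in self.cache:
            return self.cache[key]
        value = self.database.get(key)
        if value is not None:
            self.cache[key] = value
        return value


events = WriteAroundStore(database={})
events.write("event:1", "click")
print("event:1" in events.cache)  # False (the write did not populate the cache)
events.read("event:1")
print("event:1" in events.cache)  # True (the first read loaded it)
```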

Pros:

Keeps the cache lean by avoiding writes that may never be read.

Prevents unnecessary churn for write-heavy scenarios.

Cons:

The first read after a write misses the cache (or returns a stale copy if the old entry was not invalidated), adding read latency.

Inconsistent performance if reads for new data spike unexpectedly.

Best for apps with frequent writes and infrequent reads, like analytics logs or activity events.

Choosing the Right Strategy

Each caching strategy comes with its own trade-offs. To decide which one fits your system, consider:

Read vs write ratio: Is your workload read-heavy, write-heavy, or balanced?

Consistency requirements: Can your system tolerate stale data, or is strict consistency a must?

Operational complexity: How much logic are you willing to manage in the application vs the cache layer?

Understanding these trade-offs is key to making caching an enabler, not a liability.

Final Thoughts

Caching isn’t just a performance boost; it’s a critical part of system design. The right strategy reduces load, improves user experience, and scales with your needs.

Whether you're designing for high throughput, low latency, or data integrity, matching your caching strategy to your access patterns will help your application perform predictably under pressure.
